Journal Topic Organiser

September 16, 2024

A journal is a good time.

I've been keeping a journal for a while now, and it's been a great way to reflect. A typical day's entry could be about lots of things. Old stories with friends, the odd recipe, thoughts on a subject, or little reminders I might need to remember once in a while. There are other times that an entry is about one thing, usually a lot of rubber duck coding logs when I'm working on one thing all day.

A topic journal is also a good time.

Entries were enjoyable to write, and were good for general reflection, but it took a lot of digging to find specific things.

Then I stumbled across an article on the benefits of daily journals and topic journals by Derek Sivers. Derek talks about keeping two separate journals, one for daily entries and another with many files, each for topics that you have ongoing thoughts on. It's a rad idea.

But a manual transfer between the two is not a good time 😔

It did work for a while, but jumping between multiple documents became too distracting. I wanted all the topics I was writing about on a given day on a single page. This sparked a question that seemed like it had a programmatic solution:

How can I get the benefits of a topic journal, while only having to write in a single entry?

So I built a journal topic organiser.

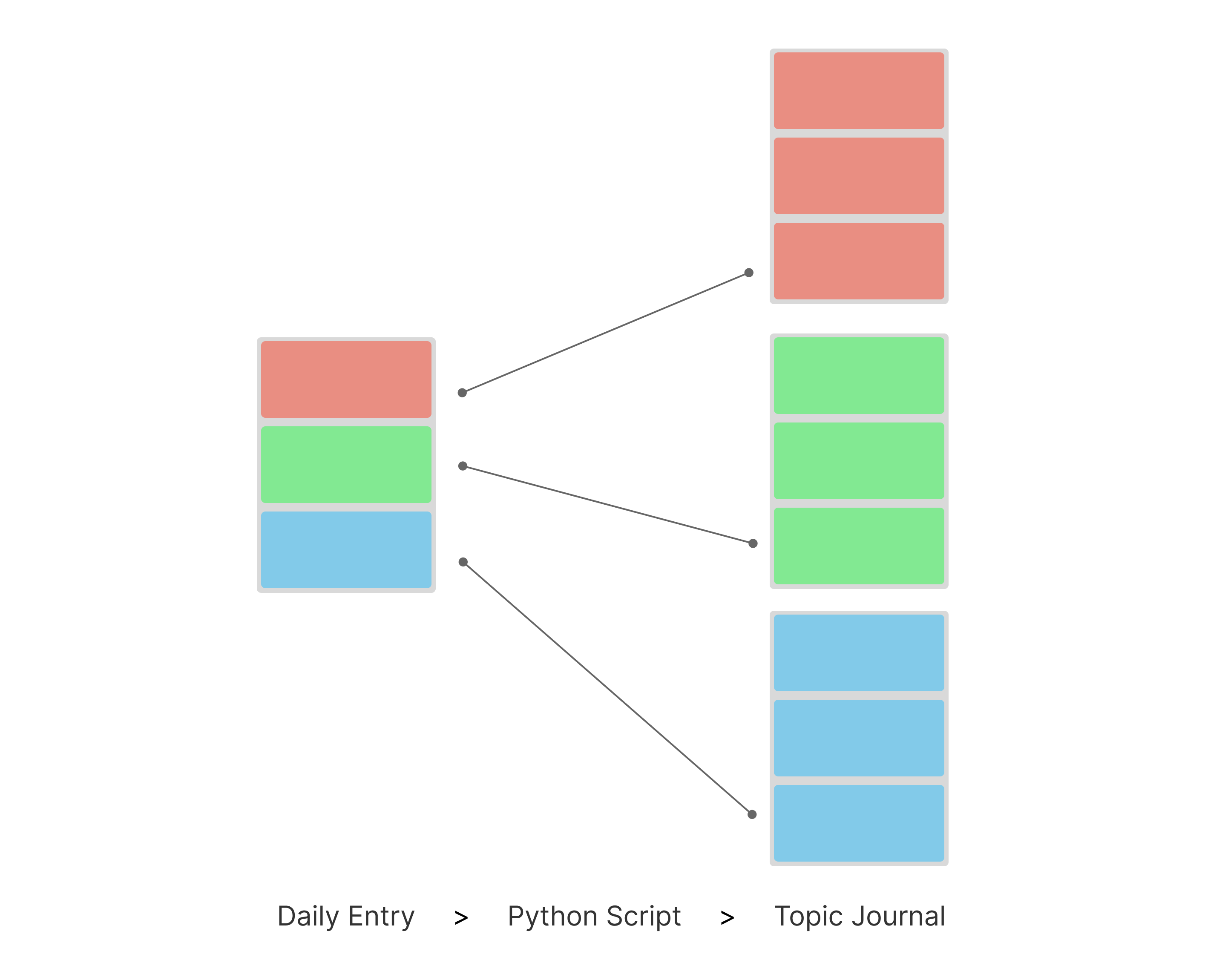

The idea was to run a script that copied the contents of one entry into multiple topic files. The script knows where to split an entry by looking for headings marked with a symbol, in this case "---" before and after each heading.

So, at the end of each day, I'd run this script, select the entry and the script would sort topics from that day into the topic journal. Easy as.

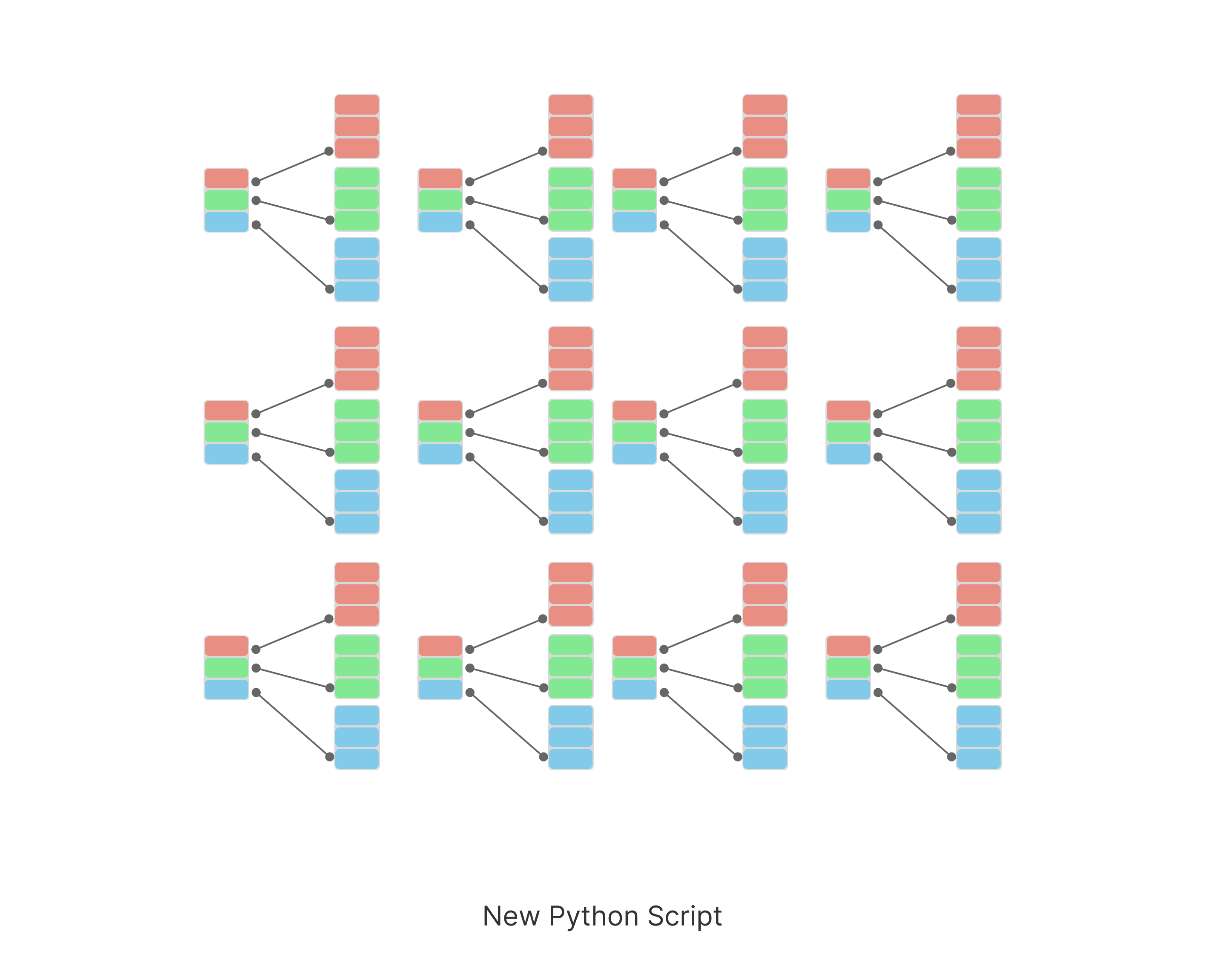

What ended up happening in reality was I would forget to run the script and a big stack of unsorted entries would pile up. Eventually, I'd get them all done in a batch, selecting each file when prompted, then check that all the topics had been copied correctly and move on to the next day. It was automated, but only a little bit.

I'll admit, this semi-manual process was relaxing, copying and checking these files. But after a while, these batches felt less like good record keeping and more like programming procrastination. So I set about sorting out the copy kinks and automating the batch process.

Now with the upgraded script all one has to do is set the date range, and it processes all the entries in one go. In the old version, it would've been a nightmare for someone without my weird OCD tendencies to deal with. Now with the batch automation, I'd say it's super useful for anyone who keeps a text file journal. Here's a breakdown of how the code works:

Modules

import re
import os
from datetime import datetime
from tkinter import Tk, filedialog

The script uses four modules: re, os, datetime and tkinter.

The re module provides support for regular expressions in Python. It's how the script does its pattern matching and string manipulation, like finding the topic headings.

The os module lets the script interact with your computer's operating system. That sounds a little scary, but it's how the script finds the entries you want to sort into your topic journal.

The datetime module is used to work with, wait for it, dates and times. More specifically, it establishes each entry's date and also checks which entries fall within the date range to add to the topic journal.

The tkinter module is used to create a graphical user interface (GUI) so the user can select the journal entries folder. It just seemed easier than hard coding a folder destination so if someone else wanted to use the script, all they'd have to change would be the date range.

Two names are imported from the tkinter module. Tk is the class that initialises the GUI, and filedialog is a submodule that provides functions to open file dialogs, the bit that lets a user choose a file or folder from their system.

Security

def sanitise_path(path):
    # Normalize the path
    path = os.path.normpath(os.path.abspath(path))
    # Ensure the path is within the allowed directory
    if not path.startswith(os.path.abspath(input_folder_path)):
        raise ValueError("Access denied: Path is outside the allowed directory.")
    return path

The sanitise_path function is a security check. It normalises the file path given to the script when it's looking for a journal entry, resolving relative path components (.. or .) into an absolute path. It then raises an error if the result falls outside the folder the user selected in the GUI, which stops a path from jumping elsewhere in the directory tree.
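One caveat with a plain startswith check is that a sibling folder sharing the same prefix (say journal_backup sitting next to journal) would also pass. Here is a minimal sketch of a stricter check, offered as an alternative rather than what the script does. The helper name is_within and its allowed_dir parameter are illustrative and not part of the original script.

```python
import os

def is_within(path, allowed_dir):
    """Return True if path resolves to allowed_dir or to something inside it."""
    path = os.path.normpath(os.path.abspath(path))
    allowed_dir = os.path.normpath(os.path.abspath(allowed_dir))
    # commonpath compares whole path components, not raw string prefixes
    return os.path.commonpath([path, allowed_dir]) == allowed_dir

print(is_within("journal/entry010724.txt", "journal"))         # True
print(is_within("journal/../secrets.txt", "journal"))          # False
print(is_within("journal_backup/entry010724.txt", "journal"))  # False
```

The third call is the case a string prefix check would let through, since "journal_backup" starts with "journal".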

def sanitise_filename(filename):
    # Remove any path component and potentially dangerous characters
    filename = os.path.basename(filename)
    return re.sub(r'[^\w\-_\. ]', '_', filename)

The sanitise_filename function is another security check. It strips any directory components from the name and replaces potentially dangerous characters with underscores, to guard against a malicious or malformed name causing trouble when it is used as a filename.
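To see what that looks like in practice, here is a small sketch that runs the same function on a few sample names. The sample inputs are made up for illustration.

```python
import os
import re

def sanitise_filename(filename):
    # Same two steps as above: drop directory parts, then swap risky characters
    filename = os.path.basename(filename)
    return re.sub(r'[^\w\-_\. ]', '_', filename)

print(sanitise_filename("Recipes"))           # Recipes
print(sanitise_filename("Work: Ideas?"))      # Work_ Ideas_
print(sanitise_filename("../../etc/passwd"))  # passwd
print(sanitise_filename("Food/Recipes"))      # Recipes
```

Note the last case: because os.path.basename treats a slash as a directory separator, a heading containing a slash keeps only the part after it.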

Extract Sections Function

def extract_sections(input_file):
    with open(input_file, 'r') as file:
        lines = file.readlines()

    section_dict = {}
    current_heading = None
    current_content = []

    for line in lines:
        match_heading = re.match(r'^---\s*([A-Za-z0-9\s\'\-&+\/\(\):.!]+)\s*---$', line.strip())
        if match_heading:
            if current_heading is not None:
                section_dict[current_heading] = current_content.copy()
            current_heading = match_heading.group(1).strip()
            current_content = []
        elif current_heading is not None:
            current_content.append(line.rstrip())

    # Capture the last section
    if current_heading is not None and current_content:
        section_dict[current_heading] = current_content.copy()

    return section_dict

The extract_sections function reads a text file and organises its contents into a dictionary, which we've called section_dict. Here's a more specific breakdown:

with open(input_file, 'r') as file:
    lines = file.readlines()

This opens the specified input_file in read mode ('r') and reads each line of the journal entry into a list called lines. It's the first step of looking for a topic heading.

section_dict = {}

current_heading = None

current_content = []

Only section_dict is an actual dictionary. The other two variables keep track of where the script is as it sifts through the lines list. Together they work like this:

- section_dict: a dictionary that stores the journal entry in topic chunks.

- current_heading: the line most recently identified by the script as a heading. Each heading becomes a key in section_dict.

- current_content: a list of lines from the entry that becomes the value stored under that key.

for line in lines:
    match_heading = re.match(r'^---\s*([A-Za-z0-9\s\'\-&+\/\(\):.!]+)\s*---$', line.strip())

The function iterates over each line in the lines list. It uses a regular expression to check if the line matches the heading format, where the heading text is enclosed in ---. For example, --- Heading ---.
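A quick way to get a feel for the pattern is to run it against a handful of lines. This is a small sketch using the same regular expression. The sample lines and the name HEADING_PATTERN are made up for illustration.

```python
import re

HEADING_PATTERN = r'^---\s*([A-Za-z0-9\s\'\-&+\/\(\):.!]+)\s*---$'

samples = [
    "--- Recipes ---",
    "---Rubber Duck Log---",
    "--- Friends & Family ---",
    "Just an ordinary line",
    "--- Unfinished heading",
    "--- Ideas? ---",
]

results = {}
for line in samples:
    match = re.match(HEADING_PATTERN, line.strip())
    # group(1) is the text between the dashes, or None if the line is not a heading
    results[line] = match.group(1).strip() if match else None
    print(repr(line), "->", results[line])
```

The first three lines come back as Recipes, Rubber Duck Log and Friends & Family. The last three come back as None: one has no dashes, one is missing the closing dashes, and one contains a question mark, which is not in the pattern's list of allowed characters.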

if match_heading:
    if current_heading is not None:
        section_dict[current_heading] = current_content.copy()
    current_heading = match_heading.group(1).strip()
    current_content = []

As the function iterates over each line of the entry, it looks for headings. If it finds a heading, it checks if a current heading is already set. If there is a current heading, it saves the accumulated lines of content in the current_content list to the section_dict object using the current_heading as the key. Then, it updates current_heading with the new heading and resets current_content to start collecting lines of content for the new section.

elif current_heading is not None:
    current_content.append(line.rstrip())

If the line does not match a heading and there is a current_heading, it appends the line (stripped of trailing whitespace) to current_content.

if current_heading is not None and current_content:
    section_dict[current_heading] = current_content.copy()

return section_dict

After processing all lines, if there is still a current_heading and current_content, it saves the last topic chunk to section_dict. Then it returns the dictionary, which contains all the headings and their corresponding content.
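To see the whole thing end to end, here is a minimal sketch that applies the same logic to a string instead of a file, so it can be run without any journal entries on disk. The function name extract_sections_from_text and the sample entry are hypothetical and only there for illustration.

```python
import re

HEADING_PATTERN = r'^---\s*([A-Za-z0-9\s\'\-&+\/\(\):.!]+)\s*---$'

def extract_sections_from_text(text):
    """String-based variant of extract_sections, for illustration only."""
    section_dict = {}
    current_heading = None
    current_content = []
    for line in text.splitlines():
        match_heading = re.match(HEADING_PATTERN, line.strip())
        if match_heading:
            # A new heading closes off the previous section
            if current_heading is not None:
                section_dict[current_heading] = current_content.copy()
            current_heading = match_heading.group(1).strip()
            current_content = []
        elif current_heading is not None:
            current_content.append(line.rstrip())
    # Capture the last section
    if current_heading is not None and current_content:
        section_dict[current_heading] = current_content.copy()
    return section_dict

sample_entry = """Text before the first heading is ignored.
--- Recipes ---
Roast the pumpkin first.
--- Coding Log ---
Fixed the date parsing bug.
Still need to test the batch run.
"""

sections = extract_sections_from_text(sample_entry)
print(sections)
# {'Recipes': ['Roast the pumpkin first.'],
#  'Coding Log': ['Fixed the date parsing bug.', 'Still need to test the batch run.']}
```

One thing this makes visible: since headings are dictionary keys, using the same heading twice in one entry would keep only the later section.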

Date Range Function

def get_files_in_date_range(folder_path, start_date, end_date):
    files = []
    for root, dirs, filenames in os.walk(folder_path):
        for filename in filenames:
            if filename.endswith('.txt'): # Adjust file extension if needed but remember to tweak other '.txt' aspects accordingly
                file_path = os.path.join(root, filename)
                creation_date_str = get_entry_date(file_path)
                try:
                    creation_date = datetime.strptime(creation_date_str, "%d%m%y")
                    if start_date <= creation_date <= end_date:
                        files.append(file_path)
                except ValueError:
                    # Skip files that don't match the expected date format
                    continue
    return files

The get_files_in_date_range function searches for text files (the journal entries) within a specified directory and returns those whose entry dates fall within a given date range, which we pass in as parameters. The end result is a list of file paths that fit the date range.

def get_files_in_date_range(folder_path, start_date, end_date):

The function takes three parameters:

- folder_path: The directory to search for files.

- start_date: The beginning of the date range (as a datetime object).

- end_date: The end of the date range (as a datetime object).

files = []

An empty list called files is initialised to store the paths of files that meet the date criteria.

for root, dirs, filenames in os.walk(folder_path):

The function uses os.walk() to traverse the directory tree starting from the given folder in folder_path. For each directory it visits, it yields a tuple (a data structure with multiple parts). This tuple has three parts:

- root: The current directory path.

- dirs: A list of subdirectories in the current directory.

- filenames: A list of files in the current directory.
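Here is a small sketch of os.walk() in action. It builds a throwaway folder with tempfile so it can run anywhere, and the folder and file names are made up for illustration.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as folder:
    # Build a tiny tree: one entry at the top, one nested, one non-text file
    os.makedirs(os.path.join(folder, "2024", "july"))
    sample_files = [
        "notes010724.txt",
        os.path.join("2024", "july", "entry020724.txt"),
        "photo.png",
    ]
    for relative in sample_files:
        with open(os.path.join(folder, relative), "w") as f:
            f.write("placeholder")

    found = []
    for root, dirs, filenames in os.walk(folder):
        for filename in filenames:
            if filename.endswith(".txt"):
                full_path = os.path.join(root, filename)
                found.append(os.path.relpath(full_path, folder))

print(sorted(found))
```

Both .txt files are found, including the one two folders deep, while photo.png is skipped.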

for filename in filenames:
    if filename.endswith('.txt'):

The function iterates over each filename in the filenames list and checks if it ends with the .txt extension, indicating it's a text file.

file_path = os.path.join(root, filename)

If the file is a text file, it constructs the full file path by joining the root directory with the filename.

creation_date_str = get_entry_date(file_path)

The function calls get_entry_date(file_path), a function we'll talk about later, to retrieve the entry date of the file as a string.

try:
    creation_date = datetime.strptime(creation_date_str, "%d%m%y")
    if start_date <= creation_date <= end_date:
        files.append(file_path)
except ValueError:
    # Skip files that don't match the expected date format
    continue

# After both loops have finished
return files

As mentioned, the core task of this function is to build a list of all the files that fit within a certain date range, and most of that happens here. It attempts to parse creation_date_str into a datetime object using the format "%d%m%y". Each % starts a format code: %d is the zero-padded day of the month (01 to 31), %m is the zero-padded month (01 to 12) and %y is the two-digit year (24 for 2024).

If the parsing succeeds and creation_date falls between start_date and end_date (inclusive), the file path is added to the files list. If a ValueError occurs (indicating the date string does not match the expected format), the function skips that file and continues with the next one. Finally, after traversing all directories and files, the function returns the files list, which contains the paths of all text files whose entry dates fall within the specified date range.
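The parsing and range check can be tried out on their own. This is a minimal sketch, with a hypothetical helper called in_range that returns False where the original function would skip the file.

```python
from datetime import datetime

start_date = datetime(2024, 7, 1)
end_date = datetime(2024, 9, 13)

def in_range(date_str):
    """Hypothetical helper: True if a DDMMYY string falls inside the range."""
    try:
        entry_date = datetime.strptime(date_str, "%d%m%y")
    except ValueError:
        # Not a valid DDMMYY date, so treat it as out of range
        return False
    return start_date <= entry_date <= end_date

print(datetime.strptime("010724", "%d%m%y"))  # 2024-07-01 00:00:00
print(in_range("010724"))  # True  (the start date itself counts)
print(in_range("130924"))  # True  (so does the end date)
print(in_range("140924"))  # False (one day past the end)
print(in_range("notes1"))  # False (not a date at all)
```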

Entry Date Function

def get_entry_date(file_path):
    # Extract the last 6 digits of the file name
    file_name = os.path.basename(file_path)
    creation_date = file_name[-10:-4] # Adjust file extension if needed but remember to tweak other '.txt' aspects accordingly
    return creation_date

The get_entry_date function extracts the entry date from the file's name. In earlier versions, it pulled the creation date from the file itself, but hardcoded entry dates at the end of the entry title work better. This is because, every so often, I'll miss a day and want to write about it a day or two later. By using a hardcoded date, this function avoids the problem of the file creation date and the day I was writing about not being the same.

def get_entry_date(file_path):

The function takes one parameter, file_path, which is the full path to the file from which the creation date will be extracted.

file_name = os.path.basename(file_path)

Then it uses os.path.basename(file_path) to obtain the base name of the file (i.e., the file name without any directory components). This is stored in the variable file_name.

creation_date = file_name[-10:-4]

Then it extracts a substring from file_name using slicing. Specifically, it takes the characters from index -10 up to, but not including, index -4. In other words, it skips the last four characters (the .txt extension) and takes the six characters before them. For example, in 'journalEntry010724.txt' the slice is '010724'.

return creation_date

Finally, the function returns the extracted creation_date, which is expected to be a string representing the date.
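Here is the slice in action, assuming a file name that ends in six date digits followed by .txt. The second function is a hypothetical alternative using a regular expression, included only to show one way of being stricter about what counts as a date. It is not part of the original script.

```python
import os
import re

def get_entry_date(file_path):
    # Same slice as above: six characters before the 4-character extension
    file_name = os.path.basename(file_path)
    return file_name[-10:-4]

def get_entry_date_regex(file_path):
    """Hypothetical alternative: six digits right before '.txt', else None."""
    match = re.search(r'(\d{6})\.txt$', os.path.basename(file_path))
    return match.group(1) if match else None

print(get_entry_date("journal/journalEntry010724.txt"))        # 010724
print(get_entry_date_regex("journal/journalEntry010724.txt"))  # 010724
print(get_entry_date("journal/notes.txt"))                     # notes
print(get_entry_date_regex("journal/notes.txt"))               # None
```

With the slice, a file without a date still returns something ('notes' here), and it is the ValueError handling in get_files_in_date_range that filters it out afterwards.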

Write Sections Function

def write_sections(output_folder, sections, creation_date):
    for heading, content_lines in sections.items():
        filename = f'{heading.lower().replace(" ", "_")}.txt' # Adjust file extension if needed but remember to tweak other '.txt' aspects accordingly
        output_file_path = os.path.join(output_folder, filename)

        # Create a unique heading for this entry
        unique_heading = f'---{heading}{creation_date}---'

        if os.path.exists(output_file_path):
            with open(output_file_path, 'r+') as file:
                content = file.read()
                if unique_heading not in content:
                    file.seek(0, 2) # Move to the end of the file
                    file.write(f'\n\n{unique_heading}\n')
                    file.write('\n'.join(content_lines))
        else:
            # File does not exist, create a new file with the heading
            with open(output_file_path, 'w') as file:
                file.write(f'{unique_heading}\n')
                file.write('\n'.join(content_lines))

Up until this point, the functions have worked together to get all the entries wrangled into the dictionary. The write_sections function is what gets the content out of the dictionary and into text files in a specified output folder.

def write_sections(output_folder, sections, creation_date):

The write_sections function takes three parameters:

- output_folder: The directory where the output files will be saved.

- sections: A dictionary containing headings as keys and lists of content lines as values.

- creation_date: A string representing the creation date to be included in the file heading.

for heading, content_lines in sections.items():

First, the write_sections function iterates over each key-value pair in the sections dictionary. heading is the key, i.e. the topic, and content_lines is the value, a list of lines associated with that heading, i.e. the writing. All this dictionary talk might sound familiar as this part of the function is built to work with the extract_sections function.

filename = f'{heading.lower().replace(" ", "_")}.txt'

output_file_path = os.path.join(output_folder, filename)

Then, write_sections constructs a filename by converting the heading to lowercase and replacing spaces with underscores, appending the .txt extension. The full output file path is created by joining the output_folder with the filename.

unique_heading = f'---{heading}{creation_date}---'

A unique heading for the section is created by enclosing the heading and creation_date in '---'. This identifies the section in the output file, so when you scroll through your topic journal, you can see the date each entry was written.
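As a quick illustration, here are the same two f-strings wrapped in small helper functions. The helper names topic_filename and make_unique_heading are made up for this sketch.

```python
def topic_filename(heading):
    # Lowercase the heading and swap spaces for underscores
    return f'{heading.lower().replace(" ", "_")}.txt'

def make_unique_heading(heading, creation_date):
    # The date is appended directly after the heading text, with no separator
    return f'---{heading}{creation_date}---'

print(topic_filename("Side Project"))                 # side_project.txt
print(make_unique_heading("Side Project", "010724"))  # ---Side Project010724---
```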

if os.path.exists(output_file_path):

The function checks if a file with the constructed output_file_path already exists.

with open(output_file_path, 'r+') as file:
    content = file.read()
    if unique_heading not in content:
        file.seek(0, 2) # Move to the end of the file
        file.write(f'\n\n{unique_heading}\n')
        file.write('\n'.join(content_lines))

If the file exists, this function opens the file in read and write mode ('r+'). It reads the current content of the file and checks if the unique_heading is already present. If the heading is not found, it moves the file pointer to the end of the file and appends the unique_heading followed by the new content lines. This second conditional is a bit of a failsafe, since the unique heading won't appear unless the date ranges are set to add entries that have already been added to the topic journal, but it happened all the time when I was doing it manually and it doesn't hurt to keep it in.
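The failsafe is easy to check by writing the same section twice. This is a minimal sketch that mirrors the 'r+' branch in a hypothetical helper called append_once, using a temporary folder and made-up content.

```python
import os
import tempfile

def append_once(path, unique_heading, content_lines):
    """Hypothetical helper mirroring the 'r+' branch: append only if the heading is new."""
    with open(path, 'r+') as file:
        content = file.read()
        if unique_heading in content:
            return False
        file.seek(0, 2)  # Move to the end of the file before writing
        file.write(f'\n\n{unique_heading}\n')
        file.write('\n'.join(content_lines))
        return True

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "recipes.txt")
    with open(path, 'w') as file:
        file.write("---Recipes010724---\nRoast the pumpkin first.")

    first = append_once(path, "---Recipes020724---", ["Add more garlic."])
    second = append_once(path, "---Recipes020724---", ["Add more garlic."])
    with open(path) as file:
        final_text = file.read()

print(first, second)                            # True False
print(final_text.count("---Recipes020724---"))  # 1
```

The second call changes nothing, which is why re-running the script over an overlapping date range doesn't duplicate entries.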

else:
    with open(output_file_path, 'w') as file:
        file.write(f'{unique_heading}\n')
        file.write('\n'.join(content_lines))

If the file does not exist, it means this is the first entry on a topic and it opens a new file in write mode ('w'). It writes the unique_heading followed by the content lines to the new file.

So now all our functions are primed and it's time for execution.

Execution

if __name__ == "__main__":
    # Use Tkinter to select the input folder path interactively
    root = Tk()
    root.withdraw()
    input_folder_path = filedialog.askdirectory(title="Select Input Folder")
    root.destroy()

    output_folder_path = 'quests' # Call the output folder whatever you like, I called it 'quests' because I'm a big RPG nerd.
    if not os.path.exists(output_folder_path):
        os.makedirs(output_folder_path)

    # Define date range
    end_date = datetime(2024, 9, 13) # format is year, month, day
    start_date = datetime(2024, 7, 1) # format is year, month, day

    # Get files within the date range
    input_file_paths = get_files_in_date_range(input_folder_path, start_date, end_date)

    for input_file_path in input_file_paths:
        input_file_path = sanitise_path(input_file_path)
        sections = extract_sections(input_file_path)
        creation_date = get_entry_date(input_file_path)

        for heading, content_lines in sections.items():
            safe_heading = sanitise_filename(heading)
            write_sections(output_folder_path, {safe_heading: content_lines}, creation_date)

    print(f"Processed {len(input_file_paths)} files.")

This main execution block lets you select an input folder, processes the journal entries within a specified date range and writes each topic to the corresponding topic file in your topic journal.

if __name__ == "__main__":

This line checks if the script is being run as the main program. If true, the following code block will execute. It's a common Python idiom that stops this block from running when the file is imported as a module by another script.

root = Tk()

root.withdraw()

input_folder_path = filedialog.askdirectory(title="Select Input Folder")

root.destroy()

Here we initialise a Tkinter GUI application and call it 'root'. Then, root.withdraw() hides the main window since only a file dialog is needed. After that filedialog.askdirectory(...) opens a dialog for the user to select an input folder, storing the selected path in input_folder_path. Finally, root.destroy() closes the Tkinter application after the folder selection.

output_folder_path = 'quests' # Call the output folder whatever you like, I called it 'quests' because I'm a big RPG nerd.

if not os.path.exists(output_folder_path):
    os.makedirs(output_folder_path)

Here we set the name of the output folder to 'quests'. You can call the output folder what you like, but I called it quests because I'm a big nerd. Then we check if the output folder exists, since if you're just building your topic journal, you might not have a folder ready to go and this takes care of that using os.makedirs().
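As a side note, os.makedirs() also accepts an exist_ok argument, which folds the existence check and the folder creation into one call. Here is a minimal sketch of that variant, run inside a temporary folder so it doesn't touch a real journal. It is offered as an option, not as what the script does.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as base:
    output_folder_path = os.path.join(base, "quests")
    os.makedirs(output_folder_path, exist_ok=True)  # Creates the folder
    os.makedirs(output_folder_path, exist_ok=True)  # Already there, so nothing happens
    created = os.path.isdir(output_folder_path)

print(created)  # True
```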

end_date = datetime(2024, 9, 13) # format is year, month, day

start_date = datetime(2024, 7, 1)

These two lines define the date range for filtering journal entries. Both dates are inclusive, so make sure the entries you want to sort fall on or between them.

input_file_paths = get_files_in_date_range(input_folder_path, start_date, end_date)

Next, the execution block calls the get_files_in_date_range function to retrieve a list of journal entries from the selected input folder that have an entry date within the specified date range. The resulting file paths are stored in input_file_paths.

for input_file_path in input_file_paths:
    input_file_path = sanitise_path(input_file_path)
    sections = extract_sections(input_file_path)
    creation_date = get_entry_date(input_file_path)

Next, the execution block iterates over each file path in input_file_paths. For each file, it does the following:

- Calls sanitise_path() to ensure the entry's file path is safe and normalised.

- Calls extract_sections() to read the entry and organise its content into sections, stored in our trusty sections dictionary.

- Calls get_entry_date() to retrieve the entry date.

for heading, content_lines in sections.items():
    safe_heading = sanitise_filename(heading)
    write_sections(output_folder_path, {safe_heading: content_lines}, creation_date)

Then the execution block iterates over the topic headings in sections and their corresponding content. For each section, it does the following:

- Calls sanitise_filename() to create a safe version of the heading for use as a filename.

- Calls write_sections() to write the section content to the output folder, using the heading and creation date.

print(f"Processed {len(input_file_paths)} files.")

After processing all files, it prints a message indicating how many files were processed. And if all went to plan, you have a freshly updated topic journal to organise those sparkling thoughts.

Final thoughts

If you've made it this far, congrats, you are well on your way to being a file parsing wizard and Python timelord. I enjoyed building this. It let me stick with a simple text file system as opposed to going for a more complicated notes app with all the bells and whistles. After all, text files will work a lot longer than I will. The script adds a bit of convenience, a touch of automation salt in the journaling stew. If you'd like to try the script, check it out on GitHub. Happy journaling!